"The ChatGPT moment in Robotics" Nvidia Keynotes leading the AI revolution in 2025

From AI agents to Physical AI and humanoid robots, Nvidia CEO Jensen Huang kept the crowd engaged with his vision of the next trillion-dollar investments.

Last night at CES 2025 Day 1, Jensen Huang, the President, co-founder, and CEO of Nvidia, delivered the keynote announcing the next wave of AI trends.

He highlighted Nvidia's vision and long-term strategic investment in unleashing AI's unexplored potential.

The session has lit up the internet. Everyone is talking about it.

The announcements covered technology trends, new AI frameworks, the next big thing in AI, and how the Nvidia platform is getting ready to equip the tech industry to adopt and develop next-gen AI applications.

Here are the two key takeaways from my viewpoint:

A Paradigm shift phase to embed AI anywhere

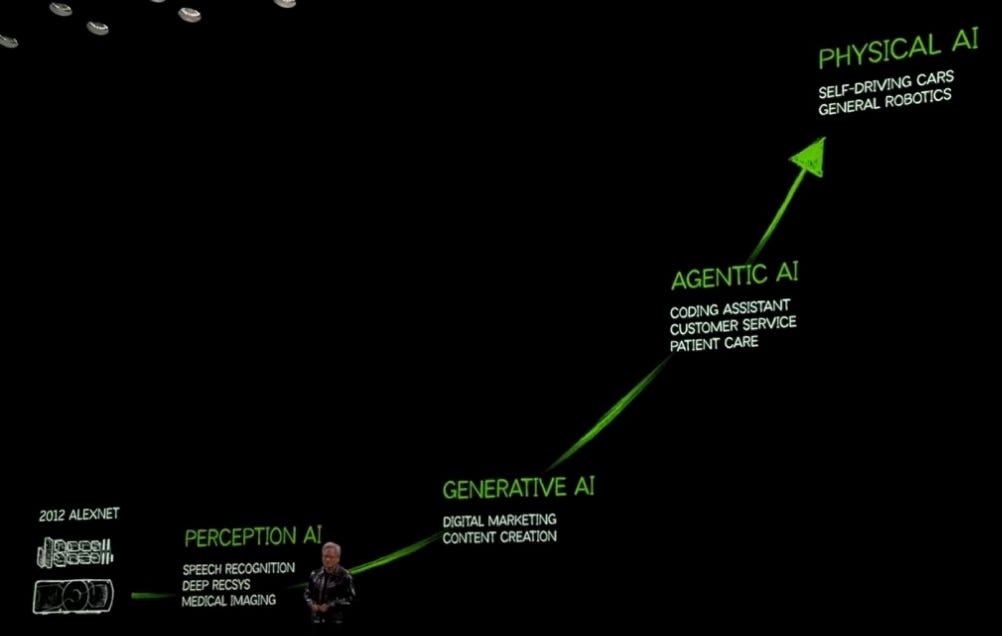

Since Generative AI (GenAI) emerged as a technology wave, AI has been evolving faster than ever.

The journey from hand-coded scripts and instruction sets to the Transformer architecture, introduced by Google researchers in 2017, has revolutionized the AI and computing world.

The transformer approach has made it possible to analyze data in almost any form (text, video, sound, images) and transform it into another form.

Nvidia's technology has helped build AI models at scale. Generating synthetic data, running transformations on faster CPUs and GPUs, and using better algorithms to train and retrain models for reliable results have all contributed to faster GenAI applications.

After training, the models are further refined with reinforcement learning so that their outputs become more reliable and they gain additional capabilities.

ChatGPT was released in November 2022, and GenAI has since been rapidly adopted in many everyday applications, such as content creation and the analysis of user preferences, behavior, and sentiment for personalized recommendations.

So what is next - beyond GenAI and AI models?

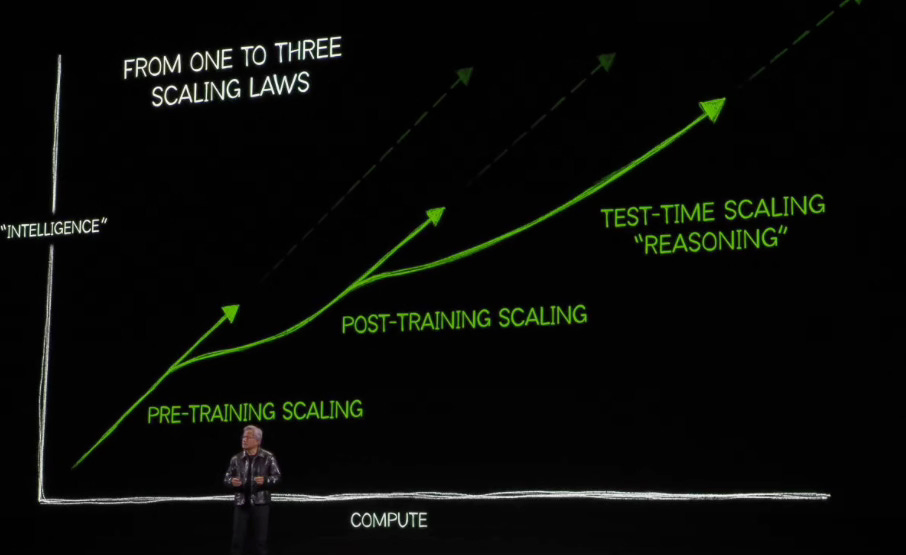

In his keynote, Jensen Huang explained the scaling laws. With the ability to transform data and train or retrain AI models, we are advancing to the next phase, test-time scaling, in which a model spends more compute at inference time to reason its way to more robust and accurate results.
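To make the idea of test-time scaling concrete, here is a minimal sketch of one well-known flavor of it: sampling several answers and taking a majority vote. This is not how the keynote described any specific Nvidia system. The `noisy_solver` function is a hypothetical stand-in for a model that is right only some of the time, and the numbers (`p_correct`, the question format) are assumptions chosen purely for illustration.

```python
import random
from collections import Counter


def noisy_solver(question: int, rng: random.Random, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for a model.

    The "right" answer is question * 2. The solver returns it with
    probability p_correct, otherwise a nearby wrong value.
    """
    truth = question * 2
    if rng.random() < p_correct:
        return truth
    return truth + rng.choice([-2, -1, 1, 2])


def answer_with_votes(question: int, n_samples: int, rng: random.Random) -> int:
    """Spend more inference-time compute: sample n answers, keep the most common."""
    votes = Counter(noisy_solver(question, rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of random questions answered correctly with n_samples votes each."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        question = rng.randint(1, 100)
        if answer_with_votes(question, n_samples, rng) == question * 2:
            correct += 1
    return correct / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"samples per question: {n:2d}  accuracy: {accuracy(n):.3f}")
```

Running the script should show accuracy rising as more samples are drawn per question, which is the basic trade the scaling law describes: more compute at answer time in exchange for better results, with the underlying model left unchanged.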

With more accurate AI models, the next phase of AI advancement beyond GenAI becomes possible: Agentic AI, the third wave of AI.

Unlike GenAI, which reacts to a prompt, Agentic AI (AI agents) is proactive and autonomous.
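The difference between reactive and proactive behavior can be sketched in a few lines. The example below is a toy illustration under my own assumptions, not an Nvidia API: `reactive_model` and `InboxAgent` are hypothetical names, and the ticket-routing rule is deliberately trivial. The point is only the shape of the control flow: one prompt in and one response out, versus a loop that observes, decides, and acts until its goal is met.

```python
from dataclasses import dataclass, field


def reactive_model(prompt: str) -> str:
    """GenAI-style interaction: one prompt in, one response out, then it stops."""
    return f"Response to: {prompt}"


@dataclass
class InboxAgent:
    """Toy agent that works toward a goal (an empty inbox) without per-step prompts."""

    inbox: list
    log: list = field(default_factory=list)

    def choose_action(self, ticket: str) -> str:
        # Hypothetical policy: escalate outages, auto-reply to everything else.
        return "escalate" if "outage" in ticket else "auto_reply"

    def run(self, max_steps: int = 10) -> list:
        steps = 0
        while self.inbox and steps < max_steps:
            ticket = self.inbox.pop(0)           # observe the next item
            action = self.choose_action(ticket)  # decide what to do
            self.log.append((ticket, action))    # act (here: just record it)
            steps += 1
        return self.log


if __name__ == "__main__":
    print(reactive_model("Summarize this ticket"))
    agent = InboxAgent(["password reset", "outage in region A"])
    for ticket, action in agent.run():
        print(f"{ticket!r} -> {action}")
```

A real agent would replace `choose_action` with a model call and `log.append` with actual tool use, but the loop with a goal and a step limit is the part that makes it agentic.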

One promising application of AI agents that we saw is Digital Workers: AI-powered agents designed to function as virtual employees, perform specific tasks autonomously, and collaborate with humans.

Agentic AI takes automation to the next level by combining AI's flexibility with domain-specific capabilities for a wider range of applications.

Digital Workers will create the following core trends.

Workforce augmentation -

The key is not to replace employees but to augment them by taking over mundane or intensive tasks so humans can focus more on creative tasks.

Examples: data entry, reporting, customer service, and monitoring and alerting.

AI-Driven IT management -

The IT department of the future will manage digital agents: optimizing them to adapt faster through continuous and reinforcement learning on training data, and aligning them more closely with business goals.

The next Trillion-Dollar Opportunity…

Jensen Huang believes this is the next trillion-dollar opportunity, with many industries adopting a digital workforce driven by Agentic AI.

Many AI-native startups will emerge to develop this ecosystem and deploy Agentic AI at scale.

“The ChatGPT moment for general robotics is around the corner”

Physical AI: the fourth wave of AI advancement

Physical AI converges AI with the physical world so that it can interact seamlessly with humans and their surroundings.

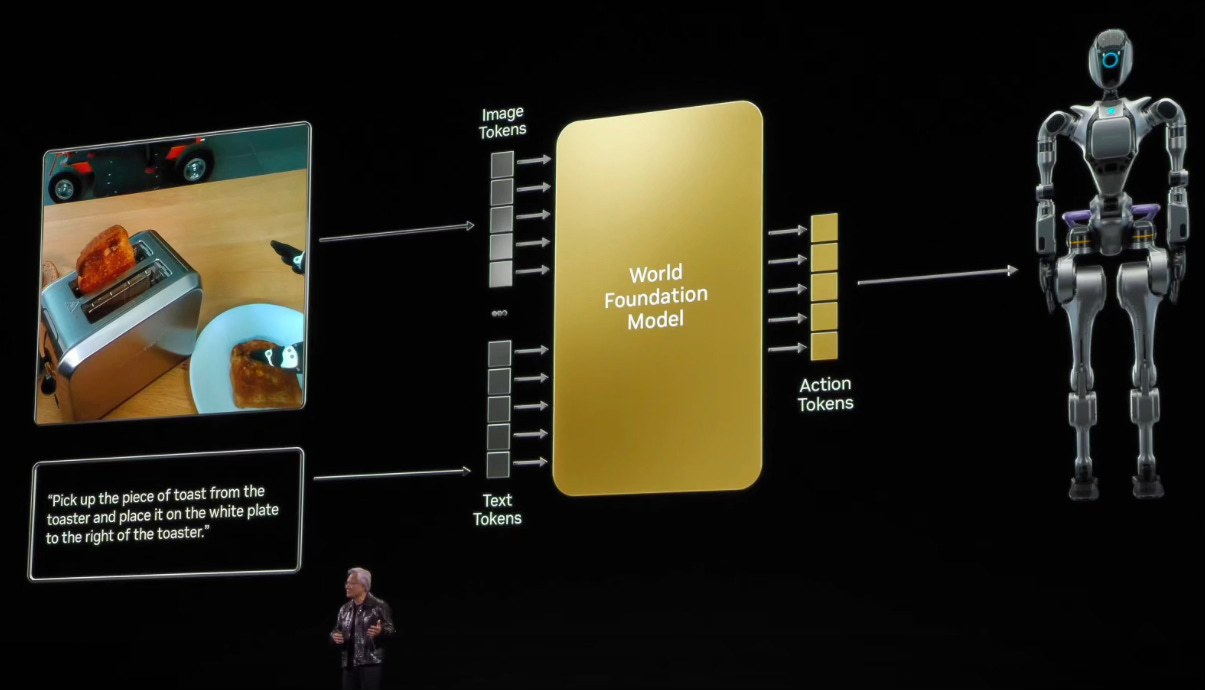

Traditional AI models take text, sound, images, or video as input and transform it into other content.

Physical AI takes advantage of spatial computing and augmented and virtual reality (AR/VR) to recognize its surroundings, build relationships, and handle objects using artificial vision and touch so that it can take the necessary actions.

Physical AI enables the next level of automation, unleashing industrial digitalization by understanding dynamic work environments with less human intervention. This will enable fully autonomous self-driving vehicles that adapt and respond to real-time road conditions, and fully autonomous robots that perform complex tasks.

According to Jensen, Physical AI is a space to watch as an early long-term investment strategy.

Physical AI models will have to undergo a paradigm shift from text- and image-based inputs to "Action Token"-based inputs derived from physical actions, such as moving an object, and from an awareness of space and of the connection between an object and its surroundings.
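The keynote did not spell out how action tokens are encoded, so the sketch below shows just one common approach from robotics research, stated here as an assumption: each continuous value of an action (for example, a normalized gripper movement along x, y, and z) is clipped to a range and mapped to one of a fixed number of integer bins, so a model can handle it the way it handles text tokens. The function names, the `[-1, 1]` range, and the 256 bins are all hypothetical choices.

```python
def action_to_tokens(action, low=-1.0, high=1.0, n_bins=256):
    """Map each continuous action value to an integer token in [0, n_bins - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)           # keep the value in range
        scaled = (clipped - low) / (high - low)        # normalize to [0, 1]
        tokens.append(int(scaled * (n_bins - 1) + 0.5))  # round to the nearest bin
    return tokens


def tokens_to_action(tokens, low=-1.0, high=1.0, n_bins=256):
    """Inverse mapping: recover the approximate continuous value for each token."""
    return [low + token / (n_bins - 1) * (high - low) for token in tokens]


if __name__ == "__main__":
    move = [0.30, -0.77, 0.05]  # hypothetical normalized (x, y, z) displacement
    tokens = action_to_tokens(move)
    print("tokens:   ", tokens)
    print("recovered:", [round(v, 3) for v in tokens_to_action(tokens)])
```

The round trip is lossy by design: each recovered value lands within half a bin width of the original, and using more bins trades a larger vocabulary for finer control.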

Just as transformers provided a framework for building AI models at scale, Physical AI frameworks like NVIDIA Cosmos aim to provide a framework for developing world models that ingest multimodal inputs, generate spatial awareness, and provide a real-time simulation environment at lower cost.

Physical AI plays a key role in Humanoid Development, and hence, it is a key strategic investment for Nvidia.

Humanoids are robots designed to resemble and mimic human traits. They work alongside humans in various industries, such as healthcare, manufacturing, and personal assistance.

Along with Physical AI models that offer foundational world models for understanding spatial relationships, the AI frameworks built on next-gen Nvidia hardware, in collaboration with Nvidia's partners, will provide capabilities for natural language processing, decision-making, and handling multimodal inputs.

In summary, Nvidia's CEO presented the company's vision and its key strategic investments in next-gen technologies, which its customers and partners can build on and scale.

A paradigm shift from GenAI to Agentic AI, the next evolutionary phase, allows us to unleash autonomous AI agents such as digital workers.

Bringing together spatial awareness and world foundation models with multimodal inputs will enable technologies powered by Physical AI and beyond, from robots that mimic human traits to general robotics.

This is the next trillion-dollar opportunity, where next-gen startups will focus their development on humanoids and workforce augmentation.